|

|

NOTE:This is a read only archive of threads posted to the FreeRTOS support forum. Use these archive pages to search previous posts. New forum support threads can be started at the FreeRTOS forums.

FreeRTOS Support Archive

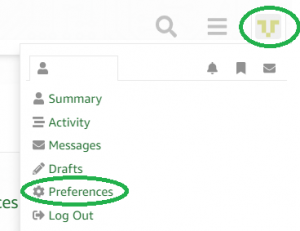

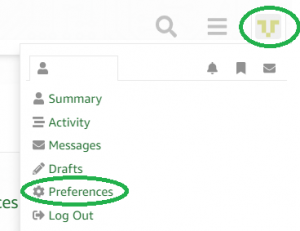

The FreeRTOS support forum can be used for active support both from Amazon Web Services and the community. In return for using our software for free, we request you play fair and do your bit to help others! Sign up for an account and receive notifications of new support topics then help where you can.

This is a read only archive of threads posted to the FreeRTOS support forum. Use these archive pages to search previous posts. New forum support threads can be started at the FreeRTOS forums.

[FreeRTOS Home]

[Live FreeRTOS Forum]

[FAQ]

[Archive Top]

[December 2012 Threads]

Shared Mutex/Queue handler macro or function?Posted by jmmag on December 11, 2012 Hi, I am a little new to the RTOS game and have been writing some standard tasks that communicate with each other by using queues and an attached circular buffer & mutex lock. I have not found any examples of this specific approach, and my current code blocks have become quite large, what with handling failure conditions (!= pdPASS, etc.) and this has driven me to consider just putting the entire block into a function which can be shared by all the tasks, similar to:

int queue_write(*queue, *mutex, *circularbuffer, *message_struct, *input_message_buffer, length){

take_mutex();

write_to_cbuff(circularbuffer, input_message, message_struct);

queue_send(message_struct);

give_mutex();

return pass_state;

}

I just wanted to know if anyone saw any pitfalls to this approach, and if I should just put it into a big macro instead.

Thanks!

Jon

Shared Mutex/Queue handler macro or function?Posted by richard_damon on December 12, 2012 Using a function may increase your stack usage a bit, using a macro will increase your program size a bit, that is the trade off.

I often have an “encapsulation” function for a common sequence of operations.

As to your example, while I know it is just a simplified version of your code, I wouldn’t expect your function to take the “message_struct” parameter. It would take the input_message, and the queue information (queue, mutex, circularbuffer, perhaps wrapped into a single struct), and the write_to_cbuff function copies the message to the slot in the buffer and returns the pointer to the message struct it copied to, which is pushed onto the queue.

I might be tempted to move the mutex into the circular buffer code, as long as it could handle message coming out in a slightly different order than they are enqueued if they come from different tasks.

Also, rather than copying the data into a circular buffer, I often find it better to have a list of buffers, and tasks needing a buffer get one from the list, and pass the buffer address as (part) of the message, and the consuming task then puts the buffer back on the free list, this way I never need to copy the data from one buffer to another like your circular buffer seems to be doing.

Shared Mutex/Queue handler macro or function?Posted by jmmag on December 12, 2012 Thanks for the response, Richard.

As to your example, while I know it is just a simplified version of your code, I wouldn’t expect your function to take the “message_struct” parameter. It would take the input_message, and the queue information (queue, mutex, circularbuffer, perhaps wrapped into a single struct), and the write_to_cbuff function copies the message to the slot in the buffer and returns the pointer to the message struct it copied to, which is pushed onto the queue.

I originally did that because the data going into the circular buffer are just byte arrays, and the message_structs that go into the queue have other information about the message (like message type, source task, etc), beyond just the message length and position in the circular buffer. That way I figured I could avoid dynamic arrays in structs. Though since the queue is really just a FIFO, having the start position in the message_struct is somewhat redundant since I am only reading from the head of the circular buffer every time, anyway. You are probably right in that I should keep the queue stuff in one struct, it seems a bit messy my way.

I might be tempted to move the mutex into the circular buffer code, as long as it could handle message coming out in a slightly different order than they are enqueued if they come from different tasks.

Yeah, I originally thought it would be nice to leave any RTOS code out of simple function like the circular buffer, and wasn’t quite sure the order in which the locking, circular buffer transfer, and queue transfer should take place. Suppose I could always add an extra layer…

Also, what do you think about the potential synchronization issues between the queue and the circular buffer? The corresponding queue_read() function would ideally block on the queue rather than having to take the mutex every time before checking, but of course there is the chance that it could take the queue element and get interrupted by the write_to_cbuff() before it is able to take the mutex. Since right now it’s strictly FIFO I don’t think it should be a problem, but once I start wanting to prioritize messages to the front of the queue, then I will have to consider the synchronization issues.

Also, rather than copying the data into a circular buffer, I often find it better to have a list of buffers, and tasks needing a buffer get one from the list, and pass the buffer address as (part) of the message, and the consuming task then puts the buffer back on the free list, this way I never need to copy the data from one buffer to another like your circular buffer seems to be doing.

So it would essentially be a heap of fixed-length message arrays? Ideally, I wish I could just throw the message_struct and the message array into the queue; that would definitely save me a lot of trouble. But my message arrays vary anywhere from a few bytes a hundred, so any fixed-length solution seemed inefficient to me. And I will probably end up with around 15 different tasks, each with their own message queue…

Thanks again,

Jon

Shared Mutex/Queue handler macro or function?Posted by richard_damon on December 12, 2012 My comment on moving the mutex into the buffer was dependent on the buffer being able to handle “out of order” retrieval operations which is beyond what a “normal” circular buffer would handle, unless it’s size was adequate so you didn’t need to worry about actually keeping track of the taking data out of the buffer. In this case there is no need for the read to do any synchronization, as the queue entry will provide a pointer into the buffer for the data, and this access is read only. Also, normally I have only one reader task for a queue, so there isn’t the same contention issue as the write side. You do need to put the data into the buffer before putting the request onto the queue, and if you want to support the out of order retrieval, the request needs to include the address in the circular buffer the data was placed in.

My other comment was that rather than having each producer have a static buffer where it builds its request, to be then copied into the circular buffer, to then be copied out into a static buffer of the consumer, which is a lot of copying that isn’t really needed, you have the producer start by acquiring a buffer from some store, fill it with data, and then pass the address of that buffer via the queue to the consumer, which processes that data and when done, puts the buffer back into the store. This store COULD be the heap, if you are allowed to use it in the middle of processing. Normally dynamic allocations are not allowed in critical code unless there are fall backs for handling out of memory conditions (like if the producer can hold off the request until more memory becomes available, or report upstream a failure/back off state). I find it is more often a fixed length list of maximum size buffers (where the number of buffers is computed based on maximum system load and processing time). If the messages vary widely in size, it is also possible to have two (or more) stores based on the message size where small messages come from one store, and bigger from another. If you know the distribution of message sizes than this can save you memory. Note that you do NOT need a different buffer size for every size message, it could be a 10 byte buffer for 1-10 byte messages, a 100 byte buffer for 11-100 byte messages, and a 1k buffer for 101-1000 byte messages (or what ever works best with your size distribution of messages and your knowledge of the number of messages of the various sizes you need to have being processed at any given time.

Copyright (C) Amazon Web Services, Inc. or its affiliates. All rights reserved.

|

|