Task A drives the radio chip, task B draws text and images on the display.

Task A obviously has a higher priority than task B.

The SPI is guarded by a mutex. The application works, and everyone is happy.

Whilst verifying the software using the trace facility I detected a priority inversion.

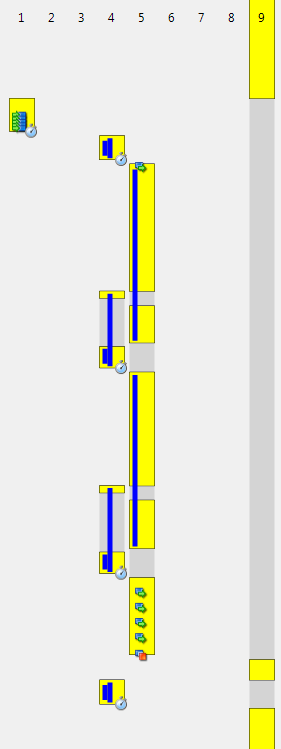

The image shows this capture. Task A (number 4) attempts to take the mutex (blue lines), which is held by task B. Task A obviously has to wait until task B gives the mutex back, which it does after it has written a whole character to the display.

Task B gives the mutex back after every character before taking it again. This is to make sure task A gets the SPI resource within a useful time.
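For readers who want to see the per-character pattern in code, here is a minimal sketch of how I understand it. It builds on a host PC, so `spi_lock()`, `spi_unlock()` and `display_write_char()` are hypothetical stand-ins, not my real driver; on the target the lock functions would presumably wrap `xSemaphoreTake(xSpiMutex, portMAX_DELAY)` and `xSemaphoreGive(xSpiMutex)`, where `xSpiMutex` is an assumed handle name.

```c
#include <stdio.h>

/* Counts how often the mutex is taken (host-side bookkeeping only). */
unsigned lock_cycles = 0;

/* Hypothetical stand-ins so the sketch builds without FreeRTOS.
 * Target: xSemaphoreTake(xSpiMutex, portMAX_DELAY) / xSemaphoreGive(xSpiMutex). */
void spi_lock(void)   { lock_cycles++; }
void spi_unlock(void) { }

/* Hypothetical driver call: sends one glyph (all of its pixels) over SPI. */
void display_write_char(char c) { (void)c; }

/* Task B's drawing loop: the mutex is held for exactly one character. */
void display_write_string(const char *s)
{
    while (*s != '\0') {
        spi_lock();
        display_write_char(*s++);
        spi_unlock();   /* task A can get the SPI here */
    }
}
```

With this pattern the worst-case wait for task A is roughly the time needed to send one character, at the cost of one take/give pair per character.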

To minimize the delay of task A I could of course give and take the mutex between every single pixel I send to the display. I think this would have a huge impact on performance (confirmed by a short test). So my idea was to check, between the pixels I send to the display, whether the mutex is wanted by another task.

I would check whether the queue of the mutex is empty or not. When it is not empty (a task is waiting), I would give and take the mutex. Although I think this would be much more efficient, I don’t like to access kernel objects directly (without an OS function).
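One way to get the same effect without touching kernel objects would be an application-level flag instead of the mutex's internal queue. This is a swapped-in technique and not something FreeRTOS provides: task A would set a flag just before it blocks on the mutex, and task B would poll that flag between pixels. The sketch below is a host-side simulation under that assumption; `spi_wanted`, `request_at`, `spi_send_pixel()` and the lock functions are all hypothetical names, and the stand-in `spi_unlock()` simply pretends that task A ran and cleared its flag.

```c
#include <stdbool.h>
#include <stddef.h>

/* Application-level flag (hypothetical). Task A would set it just before
 * blocking on the SPI mutex and clear it once it owns the mutex. */
volatile bool spi_wanted = false;

unsigned handoffs = 0;               /* how often task B released the SPI early */
size_t request_at = (size_t)-1;      /* host only: pixel at which "task A" asks */

/* Host stand-ins. Target: xSemaphoreTake(xSpiMutex, portMAX_DELAY) and
 * xSemaphoreGive(xSpiMutex), with xSpiMutex an assumed handle name. */
void spi_lock(void)   { }
void spi_unlock(void) { spi_wanted = false; /* simulates task A running */ }

/* Hypothetical driver call that clocks one pixel out over SPI. */
void spi_send_pixel(size_t i)
{
    if (i == request_at) {
        spi_wanted = true;           /* simulates task A requesting the bus */
    }
}

/* Task B: keep the mutex across pixels, hand it over only on demand. */
void display_write_pixels(size_t count)
{
    spi_lock();
    for (size_t i = 0; i < count; i++) {
        spi_send_pixel(i);
        if (spi_wanted) {            /* plain memory read, no kernel call */
            spi_unlock();            /* higher-priority task A runs here */
            spi_lock();
            handoffs++;
        }
    }
    spi_unlock();
}
```

The check costs a single read per pixel, so the give/take pair is only paid when task A is actually waiting. Whether a plain `volatile bool` is sufficient depends on the target and on how many tasks can request the SPI, so treat this as a starting point only.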

I think it might be possible to detect whether another task is waiting by using the

xQueuePeek

xQueue